From subtle reactions to understanding behavior

FaceReader

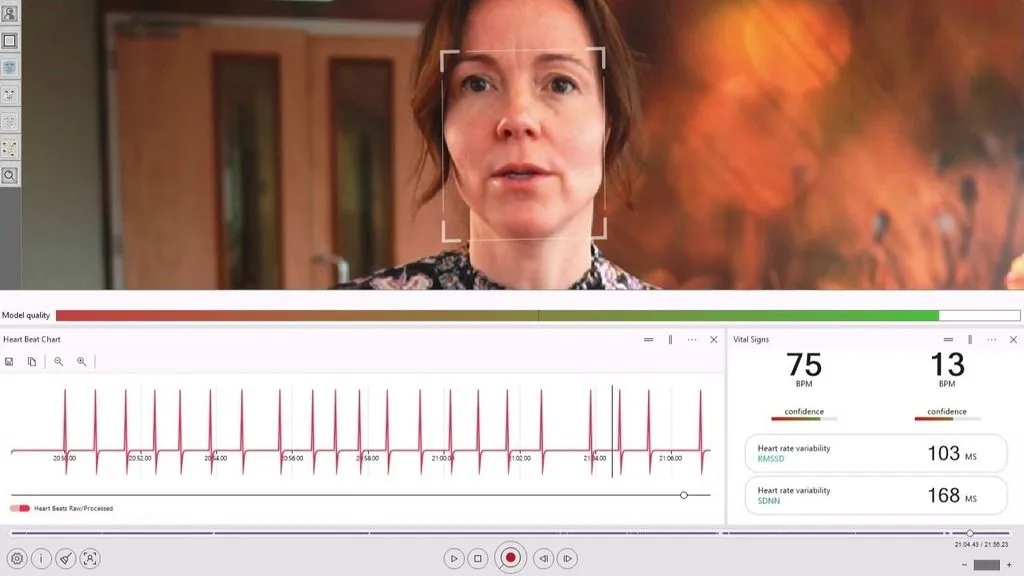

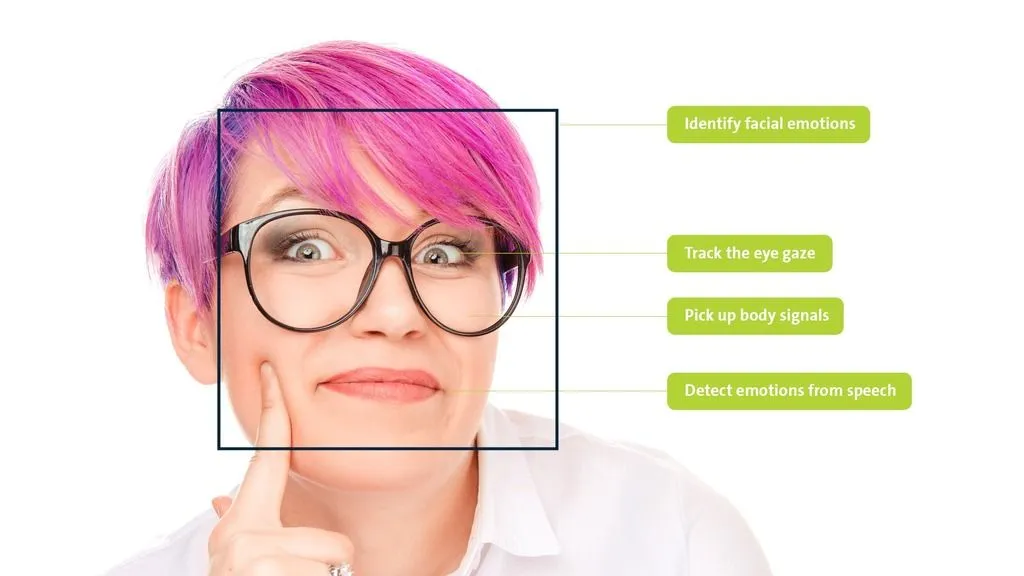

This plug-and-play software gives you a complete, contact-free view of facial expressions, vital signs, and gaze.

- From emotions to physiology to eye movement

- Without interrupting natural behavior

- Collecting instant results, with zero prep

Trusted by researchers around the world

Quick overview of FaceReader

This short info sheet gives you a clear look at what FaceReader can do: analyze behavior using just a webcam, with no hassle.

You'll find an overview of key features like emotion detection, gaze tracking, and heart rate analysis, along with examples of how it's used in research and education. A great starting point if you want to explore behavioral tools or expand your research methods.

What FaceReader stands for

No sensors, no wires, no hassle

Capture authentic responses completely non-invasively, through a simple webcam.

Immediate results, zero preparation

Simply open the software, use your webcam, and begin capturing data within seconds.

Proven accurate and validated in research

Scientifically validated and trusted worldwide for accurate and reliable results.

FaceReader is an excellent tool that we regularly use in our lab.

Dr. William Hedgcock

UNIVERSITY OF MINNESOTA, USA

Efficient coding with FaceReader

At the Social Behavior Lab at Western University, Dr. Erin Heerey explores human behavior during social interactions. She uses a round-robin design in her research.

Save hours of manual coding

Frame-by-frame expression analysis of her project would have taken 800 hours of manual coding. FaceReader did it in only 14 hours!

This has increased throughput, accuracy, and ease of scoring social behaviors. It has also made it much easier to share data with other researchers.

Setup options: SDK integration or online use

Integrate FaceReader with external applications

Need to easily integrate facial expression analysis into other applications? Using the FaceReader Software Development Kit (SDK) is the perfect solution. The SDK is available on request, based on a separate agreement. It works for Windows and Android, and can run on your PC or server.

You can also use the FaceReader API, which makes it easy to connect with the standard FaceReader for Windows software and gives you access to key FaceReader functions on your own PC.

Request your SDK via VicarVision

FaceReader Online: wherever there's internet

Connect to FaceReader Online and test participants anywhere around the globe. It is the ideal tool to get feedback from a large number of participants without inviting them to your facility. Perfect for when you want to study participants in their homes or on their mobile phones.

More about FaceReader Online

Frequently asked questions

How is FaceReader validated in research?

Unlike many other facial expression analysis tools on the market, both free and paid, FaceReader has been validated extensively by different parties and researchers.

Manual coding and expression databases

Researchers compared the results of the software with those of trained human coders.

In other studies, they compared FaceReader's output with the intended expressions in existing databases, such as the Amsterdam Dynamic Facial Expression Set (ADFES).

Reliable analysis with FaceReader

Results consistently show that FaceReader is highly accurate in determining facial expressions.

What output do I get with FaceReader?

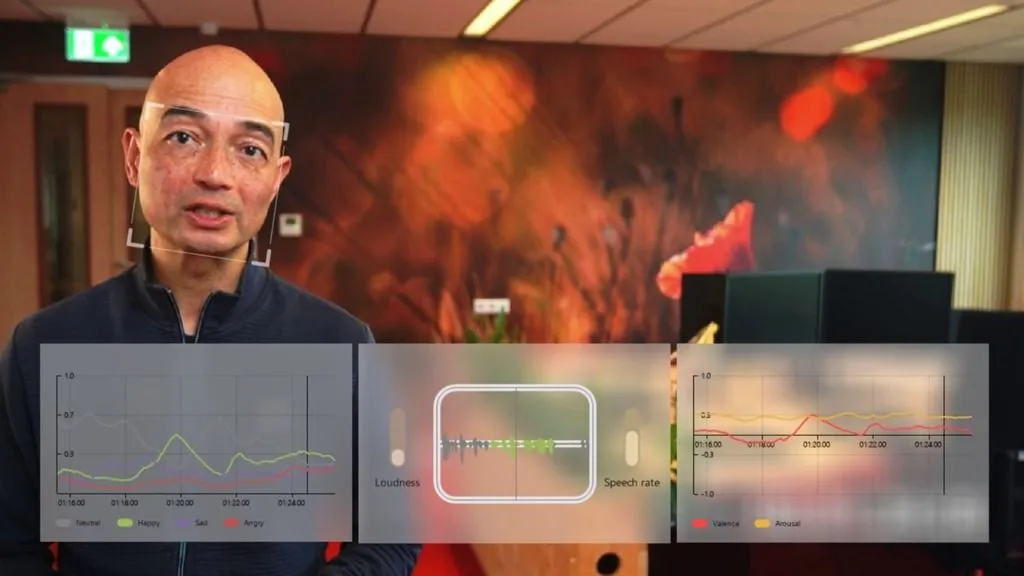

FaceReader offers real-time insights into facial expressions, Action Units, and the circumplex model of valence and arousal.

Visualize your results

The software immediately visualizes these results in line charts, bar graphs, or pie charts.

Want to look at something else? Easily choose which data you want visualized at any moment.

Advanced analysis options

Looking for more details? With in-depth project analysis you have the option to include group comparisons and personalized filters, helping you get a better data selection and overview.

Why should I use FaceReader Online?

Want to invite people from all over the world? FaceReader Online allows you to quickly test a large group of participants from any location.

Measure emotions from anywhere

FaceReader Online is perfect for when you want to study participants in their natural environment, like at home or at school. All you need is a laptop with a webcam!

When to use FaceReader Online

FaceReader Online is ideal when doing user experience or market research.

For example, easily measure liking of a product, responses to an advertisement, or the usability of a website.

Why should I use FaceReader?

FaceReader is fast, flexible, objective, accurate, and easy to use. Want to learn more about the benefits for your research project? Discover why professionals from over 1,000 universities, research institutes, and companies choose FaceReader.

What can I use FaceReader for?

From consumer and psychology research to usability studies and neuroscience, FaceReader helps you gather accurate facial expression data for your project. Learn more about how to apply FaceReader in your research.

How do I use FaceReader?

Learn more about the different ways to use FaceReader, as well as the best ways to set up your system. For example, you can work with different input sources, create your own expressions, and choose the license that works for you.

Learn more about FaceReader

Discover in-depth information about how to use FaceReader and learn how others benefit from the software. You'll also find relevant publications, as well as product overviews for specific research areas and markets.