How do I use FaceReader?

FaceReader helps you automate your research quickly and easily. The user-friendly software supports different input sources and system preferences, giving you the setup you need.

Discover options, practical tips, and how-tos that will help you get the most out of your research.


What does FaceReader offer?

FaceReader™ can be used with different input sources. You can upload still images for analysis or analyze facial expressions from a pre-recorded video file. When analyzing from video, you can choose an accurate frame-by-frame mode or skip frames for high-speed analysis.

Save valuable time

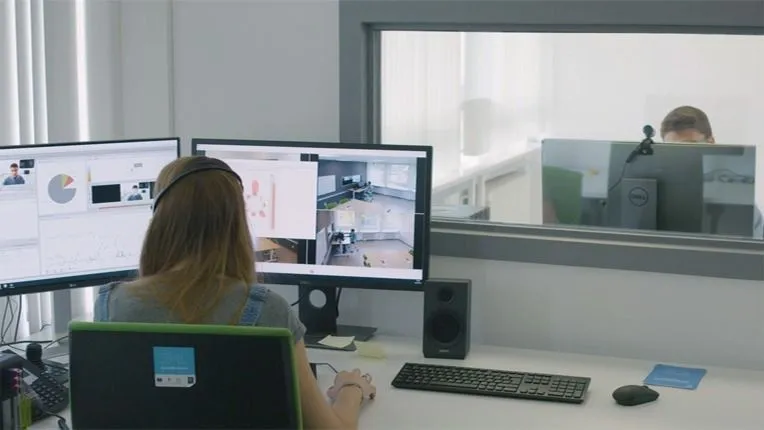

FaceReader enables you to analyze live and record video and audio simultaneously. If you plan to analyze multiple videos, you can analyze them all at the same time.

The software can also quickly detect interesting episodes, even in long series of events. This way, FaceReader saves you a lot of valuable time.

How does FaceReader work?

To classify facial expressions accurately, FaceReader uses three steps:

- Face finding – Locates the face using a face-finding algorithm based on deep learning

- Face modeling – Makes an accurate artificial face model using almost 500 key points

- Face classification – Classifies facial expressions with trained artificial neural networks

What computer hardware do I need?

FaceReader will run on computers with the following minimum technical specifications:

- Windows 11 Pro (64-bit)

- Intel Core i5 (8th generation or newer) or AMD Ryzen 5 (4000 series or newer)

- At least 8 GB RAM

- Processor support for the AVX2 instruction set or higher

Learn more about FaceReader's methodology

Free white paper

Download this free white paper to learn more about:

- How FaceReader classifies facial expressions

- What types of data you can collect for your research

- How the software is validated

"Since we started to use FaceReader in our work on situation-aware adaptive user interfaces, our research has taken a great leap forward."

Prof. Dr. Christian Märtin

Augsburg University of Applied Sciences, Germany

How do I collect my data?

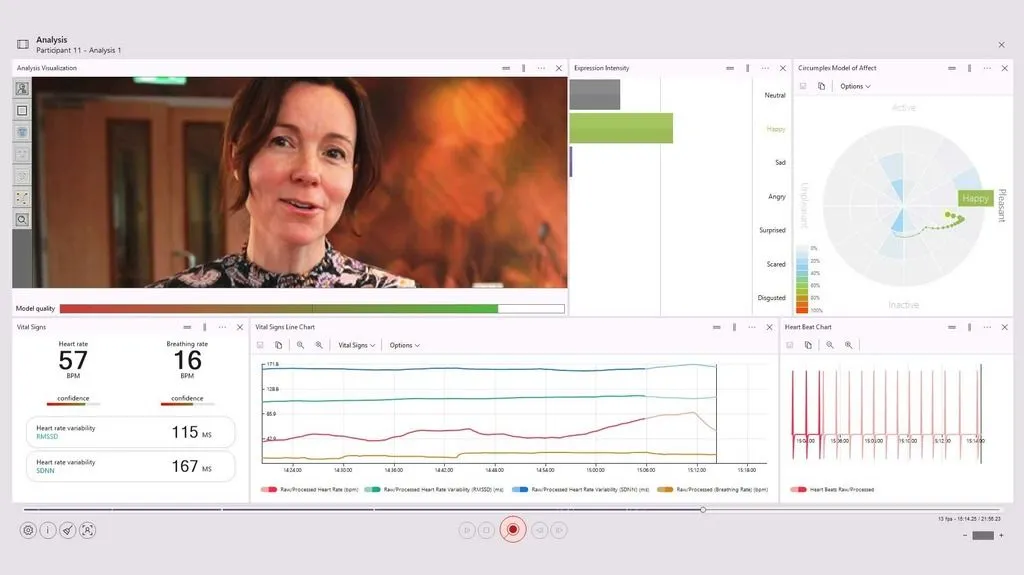

Accurate analysis for each participant

Tailor your facial expression analysis to a specific person using automatic calibration. You can either run the calibration before analysis or choose to calibrate continuously during the observation.

Calibration corrects person-specific biases toward a certain emotional expression, for example when someone's neutral face already shows a lot of anger.

Create custom expressions

Custom expressions are facial expressions or mental states that you can define yourself, such as workload, pain, or embarrassment.

To create custom expressions, you can combine variables such as facial expressions, Action Units, heart rate, and heart rate variability. You can also use valence, arousal, and head orientation in your definition of a custom expression.

Privacy & ethics in FaceReader

FaceReader is installed on-site and adheres to strict privacy-by-design protocols. For example, you can choose not to record the participant's face during analysis.

FaceReader is a software tool for scientific research. It is not capable of recognizing or identifying faces or people, and is therefore unsuitable for surveillance purposes.

Choose the license that works for you

You can use FaceReader with a hardware key (USB dongle) or a digital software license key. A fixed license is connected to a single designated computer, allowing you to use the software without an internet connection.

With a floating license, you can install the software on any computer and use it in any location with an internet connection: at the office, in the lab, or at home.

Why should I use FaceReader?

FaceReader is fast, flexible, objective, accurate, and easy to use. Want to learn more about the benefits for your research project? Discover why professionals from over 1,000 universities, research institutes, and companies choose FaceReader.

What can I use FaceReader for?

From consumer and psychology research to usability studies and neuroscience, FaceReader helps you collect accurate facial expression data for your project. Learn more about how to apply FaceReader in your research.

Learn more about FaceReader

Discover in-depth information about how to use FaceReader and learn how others benefit from the software. You'll also find relevant publications, as well as product overviews for specific research areas and markets.

How do I expand my FaceReader setup?

FaceReader offers three flexible packages designed to match your research needs: Essential, Advanced, and Premium. Start with core emotion analysis and scale up to advanced features, multi-user access, and comprehensive tools as your projects grow.

Ready to take the next step?

We’re passionate about helping you achieve your research goals. Let’s discuss your project and find the tools that fit.
