Tools for the Facial Action Coding System (FACS)

Many researchers code muscle movements to learn more about how and why the face moves. They use the Facial Action Coding System, which gives them a technique for reliably coding and analyzing facial movements and expressions.

The Facial Action Coding System (FACS), first developed by Paul Ekman and Wallace Friesen in 1978 and revised by Ekman, Friesen, and Hager in 2002, is a comprehensive, anatomically based system for describing all visually discernible facial movement.

FACS coding

FACS coders describe every observable change in facial movement in terms of Action Units (AUs): they indicate which AUs moved to produce the changes observed in the face. Because coders record muscle actions rather than interpreting what the face expresses, FACS coding is relatively objective.

Scoring requires slow-motion and frame-by-frame viewing to identify the AUs that occur, always alternated with real-time viewing. As a result, manual FACS coding is very time-intensive.

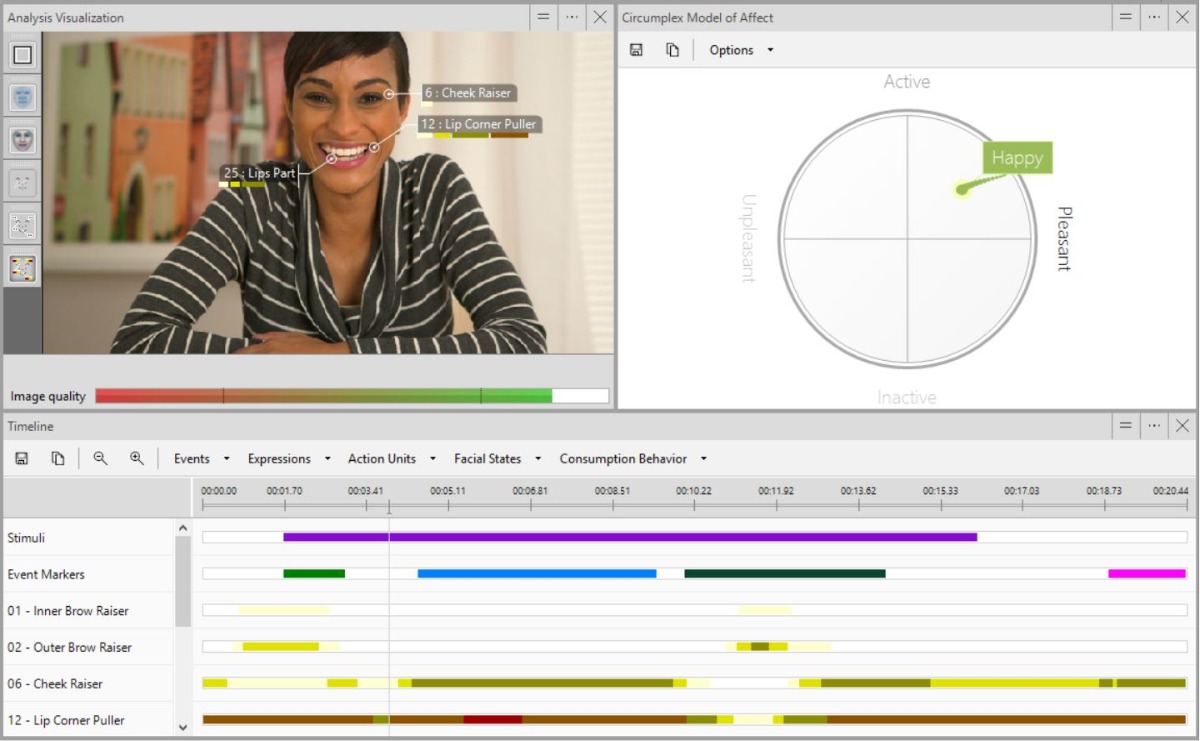

Automatically code facial actions

FaceReader™, the software for automatic recognition and analysis of facial expressions, offers you reliable automated facial action coding, which saves you valuable time and resources.

When an Action Unit is active, its intensity is displayed in five categories. The output is presented on this scale in different colors and can be exported for further analysis in Excel, The Observer® XT, or another program of your choice.
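In FACS, intensity is scored on a five-point scale from A (trace) to E (maximum). As a minimal sketch, assuming the five display categories follow that A to E convention and that an AU's intensity is available as a value between 0 and 1, binning could look like the Python below. The cut-offs and the names `intensity_category` and `CUTOFFS` are hypothetical and are not FaceReader's actual thresholds.

```python
from bisect import bisect_right
from typing import Optional

# FACS intensity letters, from weakest to strongest.
LEVELS = ["A (trace)", "B (slight)", "C (marked)", "D (severe)", "E (maximum)"]

# Hypothetical, evenly spaced cut-offs on a 0-1 intensity value.
# Illustrative only: these are NOT FaceReader's real thresholds.
CUTOFFS = [0.2, 0.4, 0.6, 0.8]


def intensity_category(value: float, active_threshold: float = 0.0) -> Optional[str]:
    """Map a normalized AU intensity (0-1) to one of five FACS-style levels.

    Returns None when the AU is not considered active.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("intensity must lie between 0 and 1")
    if value <= active_threshold:
        return None  # AU not active, so no category is shown
    # bisect_right counts how many cut-offs the value has reached,
    # which is exactly the index of its level in LEVELS.
    return LEVELS[bisect_right(CUTOFFS, value)]
```

For example, `intensity_category(0.35)` returns `"B (slight)"` under these cut-offs. Keeping the cut-offs in a separate list makes it easy to swap in whatever thresholds a given analysis actually uses.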

FaceReader analyzes left and right Action Units (AUs) separately. This unique feature distinguishes the intensity of the active muscles on the left and right sides of the face.
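Because left and right intensities are available separately, asymmetric activity can be checked directly. The sketch below assumes per-side intensities between 0 and 1 and flags AU 12 or AU 14 when it appears unilaterally, the pattern described for those AUs below as contributing to contempt. The `SidedAU` type, the function names, and both thresholds are hypothetical, chosen for illustration rather than taken from FaceReader.

```python
from dataclasses import dataclass

# Hypothetical thresholds, for illustration only.
ACTIVE = 0.2     # minimum intensity for a side to count as active
ASYMMETRY = 0.3  # minimum left/right difference to call an action unilateral

# AUs whose unilateral appearance contributes to contempt.
CONTEMPT_AUS = {12, 14}


@dataclass
class SidedAU:
    au: int       # Action Unit number
    left: float   # intensity on the left side, 0-1
    right: float  # intensity on the right side, 0-1


def is_unilateral(s: SidedAU) -> bool:
    """True when one side is active and clearly stronger than the other."""
    strongest = max(s.left, s.right)
    return strongest >= ACTIVE and abs(s.left - s.right) >= ASYMMETRY


def contempt_cues(frame: list[SidedAU]) -> list[int]:
    """Return the contempt-related AUs that appear unilaterally in this frame."""
    return [s.au for s in frame if s.au in CONTEMPT_AUS and is_unilateral(s)]
```

With `frame = [SidedAU(12, 0.7, 0.1), SidedAU(14, 0.3, 0.25), SidedAU(6, 0.6, 0.1)]`, only AU 12 is flagged: AU 14 is nearly symmetric, and AU 6 is not a contempt marker.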

What Facial Action Units look like

Recent advances in computer vision have made reliable automated facial action coding possible. Below you can see the 20 Action Units offered in FaceReader, as well as some frequently occurring or difficult-to-code action unit combinations.

Some images are zoomed in on the area of interest to show explicitly which muscle movement corresponds to each Facial Action Unit.

AU 1. Inner Brow Raiser

Contributes to the emotions sadness, surprise, and fear, and to the affective attitude interest. Muscular basis: frontalis (pars medialis).

AU 2. Outer Brow Raiser

Contributes to the emotions surprise and fear, and to the affective attitude interest. Frontalis (pars lateralis) is the underlying facial muscle.

AU 4. Brow Lowerer

Contributes to the emotions sadness, fear, and anger, and to the affective attitude confusion. Muscles: depressor glabellae, depressor supercilii, and corrugator supercilii.

AU 5. Upper Lid Raiser

Contributes to the emotions surprise, fear, and anger, and to the affective attitude interest. Muscular basis: levator palpebrae superioris and superior tarsal muscle.

AU 6. Cheek Raiser

Contributes to the emotion happiness. Orbicularis oculi (pars orbitalis) is the underlying facial muscle.

AU 7. Lid Tightener

Contributes to the emotions fear and anger, and to the affective attitude confusion. Orbicularis oculi (pars palpebralis) is the underlying facial muscle.

AU 9. Nose Wrinkler

Contributes to the emotion disgust. Levator labii superioris alaeque nasi is the underlying facial muscle.

AU 10. Upper Lip Raiser

Levator labii superioris, caput infraorbitalis is the underlying facial muscle.

AU 12. Lip Corner Puller

Contributes to the emotion happiness, and to contempt when the action appears unilaterally. Muscular basis: zygomaticus major.

AU 14. Dimpler

Contributes to the emotion contempt when the action appears unilaterally, and to the affective attitude boredom. Buccinator is the underlying muscle.

AU 15. Lip Corner Depressor

Contributes to the emotions sadness and disgust, and to the affective attitude confusion. Depressor anguli oris is the underlying muscle.

AU 17. Chin Raiser

This Action Unit contributes to the affective attitudes interest and confusion. The underlying facial muscle is mentalis.

AU 18. Lip Pucker

The underlying facial muscles are incisivii labii superioris and incisivii labii inferioris.

AU 20. Lip Stretcher

Contributes to the emotion fear. The underlying facial muscle is risorius, with platysma.

AU 23. Lip Tightener

Contributes to the emotion anger, and to the affective attitudes confusion and boredom. Muscular basis: orbicularis oris.

AU 24. Lip Pressor

This Action Unit contributes to the affective attitude boredom. The underlying facial muscle is orbicularis oris.

AU 25. Lips Part

The muscular basis consists of depressor labii inferioris, or relaxation of mentalis or orbicularis oris.

AU 26. Jaw Drop

Contributes to the emotions surprise and fear. Muscular basis: masseter; relaxed temporalis and internal pterygoid.

AU 27. Mouth Stretch

The underlying muscles are the pterygoids and digastric.

AU 43. Eyes Closed

Contributes to the affective attitude boredom. The muscular basis consists of relaxation of levator palpebrae superioris.

Combinations of action units

AU 1 - 2 - 4

Contributes to the emotion fear and can be recognized by the wavy pattern of the wrinkles across the forehead.

AU 1 - 2

Contributes to the emotion surprise and can be recognized by a smooth line formed by the wrinkles across the forehead.

AU 1 - 4

Contributes to the emotion sadness. Recognizable by a wavy pattern of wrinkles in the center of the forehead. The eyebrows come together and up.

AU 4 - 5

Contributes to the emotion anger.

AU 6 - 12

Contributes to the emotion happiness. Notice the wrinkles around the eyes caused by cheek raising, also known as the "Duchenne marker".

AU 10 - 25

Contributes to the emotion disgust. When AU 10 is activated intensely, the lips part as the upper lip rises.

AU 18 - 23

Often mistaken for AU 18 alone. Notice that the lips almost appear to be pulled outward by a single string (AU 18) and then tightened (AU 23).

AU 23 - 24

The AUs marking lip movements are often the hardest to code. Here the lips are pushed together (AU 24) and tightened (AU 23).
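The combinations above that name a single emotion can be expressed as a small lookup table. The Python sketch below returns the emotions whose full combination is present in a set of active AUs, preferring the most specific match so that AU 1 + 2 + 4 yields fear rather than both fear and surprise. It is an illustrative rule of thumb with hypothetical names (`COMBINATIONS`, `candidate_emotions`), not the way FaceReader classifies expressions.

```python
# AU combinations and the emotion each contributes to, as listed above.
COMBINATIONS: dict[frozenset[int], str] = {
    frozenset({1, 2, 4}): "fear",
    frozenset({1, 2}): "surprise",
    frozenset({1, 4}): "sadness",
    frozenset({4, 5}): "anger",
    frozenset({6, 12}): "happiness",
    frozenset({10, 25}): "disgust",
}


def candidate_emotions(active_aus: set[int]) -> list[str]:
    """Return emotions whose AU combination is fully present in active_aus.

    A matched combination that is a strict subset of another matched
    combination is dropped, so only the most specific matches remain.
    """
    matched = [combo for combo in COMBINATIONS if combo <= active_aus]
    most_specific = [
        combo for combo in matched
        if not any(combo < other for other in matched)
    ]
    return sorted(COMBINATIONS[combo] for combo in most_specific)
```

For instance, `candidate_emotions({6, 12, 25})` returns `["happiness"]`. Combinations such as AU 18 + 23 or AU 23 + 24 are left out because no emotion is listed for them.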

Free white paper

Facial Action Coding System (FACS)

Facial action coding is used in many different settings, for example in science, in teaching, and by animators. It enables greater awareness of subtle facial behaviors.

Download the free white paper and find out:

- how to measure AUs objectively

- which tools will provide you results

- all the benefits of using FACS

FaceReader is used worldwide at more than 1,000 universities (including 6 out of 8 Ivy League universities), research institutes, and companies in many fields, such as psychology, consumer research, user experience, human factors, and neuromarketing.

Interesting publications

A diverse collection of scientific articles citing Noldus products is published in renowned journals each week. The following list is only a small selection of scientific publications about the Facial Action Coding System and/or facial expression analysis.

- Ekman, P., Friesen, W. V., & Hager, J. C. (2002). Facial action coding system: The manual on CD-ROM. Instructor’s Guide. Salt Lake City: Network Information Research Co.

- Lewinski, P. et al. (2014). Predicting Advertising Effectiveness by Facial Expressions in Response to Amusing Persuasive Stimuli. Journal of Neuroscience, Psychology, and Economics, 7, 1-14.

- van der Schalk, J. et al. (2011). Moving Faces, Looking Places: Validation of the Amsterdam Dynamic Facial Expression Set (ADFES). Emotion, 11(4), 907-920. https://doi.org/10.1037/a0023853

