Solutions for

Emotion analysis

Emotions are what make us human, and we all experience them. They are created by the brain and are related to interests and past experiences: they signal that interests are at stake, in a positive or negative way. Emotions cause your cortex to pay attention, since the main task of the brain is to keep us alive and well.

Emotion data gives researchers deeper insight into complex human behavior. Emotions can play a role in all kinds of matters: for example, in deciding whether or not to buy something, in food choices, and in how we interact with others.

Facial expression recognition software

Facial expression analysis software like FaceReader™ is ideal for collecting this emotion data. The software automatically analyzes the expressions happy, sad, angry, surprised, scared, and disgusted, and it also recognizes a neutral state.

Additionally, FaceReader can analyze contempt and calculates Action Units, valence, arousal, gaze direction, head orientation, and personal characteristics such as gender and age. Moreover, FaceReader comes with a range of custom expressions, as well as the option to create your own.

FaceReader software is very promising and sufficiently accurate to detect differences in facial emotion expressions induced by different tastes of food for different mood groups.

Prof. Dr. E. Bartkiene, Lithuanian University of Health Sciences, Lithuania

Objective assessment of emotions

Many researchers have turned to FaceReader for a more objective assessment of emotions. It is used worldwide at more than 1,000 universities (including 6 of the 8 Ivy League universities), research institutes, and companies in many markets such as psychology, consumer research, user experience, human factors, and neuromarketing.

Using the software eliminates biases. Because it analyzes your data immediately and is very easy to use, it also saves a huge amount of valuable time. According to a validation study, FaceReader shows the best performance out of the major software tools for emotion classification currently available.

Measuring emotions

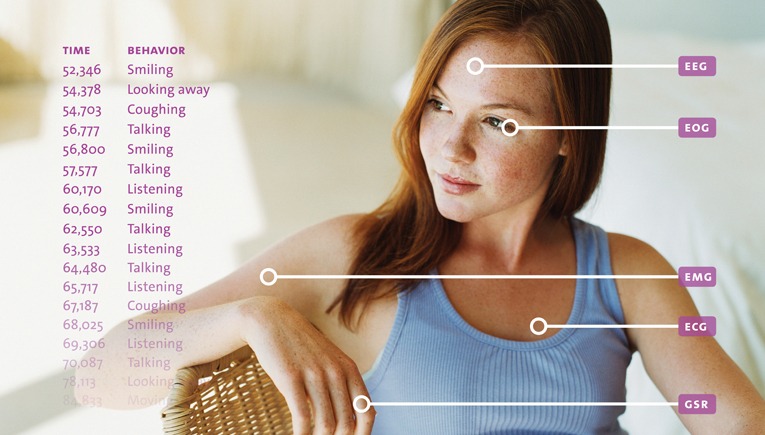

All emotions, whether they are suppressed or not, are likely to have a physical effect. Biometric research will bring these effects to the surface by studying subconscious processes related to attention, cognition, emotion, and physiological arousal.

For example, combine eye tracking and facial expression analysis to find out where the participant looked when frustrated, or which element in the video surprised the participant the most. Here, perfect synchronicity is key to matching the measurement of attention or cognitive workload to emotions.
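To illustrate why synchronized timestamps matter, here is a minimal Python sketch that pairs each facial expression sample with the nearest gaze sample in time. It is an illustration only and does not use FaceReader or any eye tracker API: the function names (`nearest_sample`, `align`), the 50 ms tolerance, and the sample data are all assumptions made for this example.

```python
from bisect import bisect_left


def nearest_sample(timestamps, t):
    """Return the index of the timestamp closest to t (timestamps sorted ascending)."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbor is closer to t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(gaze, expressions, tolerance=0.05):
    """Pair each expression sample with the nearest gaze sample.

    gaze:        list of (time_in_seconds, area_of_interest), sorted by time
    expressions: list of (time_in_seconds, expression_label)
    tolerance:   maximum allowed time difference in seconds (assumed value)
    """
    gaze_times = [g[0] for g in gaze]
    pairs = []
    for t, label in expressions:
        j = nearest_sample(gaze_times, t)
        # Drop expression samples that have no gaze sample close enough in time.
        if abs(gaze_times[j] - t) <= tolerance:
            pairs.append((t, label, gaze[j][1]))
    return pairs


# Hypothetical data: both streams share the same clock.
gaze = [(0.00, "logo"), (0.10, "menu"),
        (0.20, "checkout button"), (0.30, "checkout button")]
expressions = [(0.02, "neutral"), (0.21, "angry"), (0.55, "surprised")]

aligned = align(gaze, expressions)
for t, label, aoi in aligned:
    print(f"{t:.2f}s  {label:<8}  looking at: {aoi}")
```

In this sketch the "surprised" sample at 0.55 s is dropped because no gaze sample lies within the tolerance. If the two clocks were offset by even a few hundred milliseconds, expressions would be matched to the wrong gaze target, which is why the data streams need to be recorded in sync.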

Tiffany Andry: "I like to use these tools"

The Social Media Lab in Mons, Belgium brings together researchers, students, professionals, and professors from different disciplines (communication, marketing, journalism, computer science, etc.). Together they try to understand the digital world, train themselves in the use of new technology, and learn more about, and advise on, new professional practices.

Free white paper

FaceReader Methodology Note

Download this free white paper and learn more about:

- What is FaceReader?

- How does FaceReader work?

- FaceReader's output

- Add-on Modules

- Validation

Interesting publications

A diverse collection of scientific articles citing Noldus products has been published in renowned journals. The following list is only a small selection. Please contact us if you need more reference material.

- Jiang, L., et al. (2019). Can joy buy you money? The impact of the strength, duration, and phases of an entrepreneur’s peak displayed joy on funding performance. Academy of Management Journal, 62(6), 1848-1871. https://doi.org/10.5465/amj.2017.1423

- Stöckli, S., et al. (2018). Facial expression analysis with AFFDEX and FACET: A validation study. Behavior Research Methods, 50(4), 1446-1460.

- Zempelin, S., et al. (2021). Emotion induction in young and old persons on watching movie segments: Facial expressions reflect subjective ratings. PLoS ONE, 16(6), e0253378. https://doi.org/10.1371/journal.pone.0253378

Relevant blogs

Why a clear mask is essential for clearer communication

For people who are deaf or hard of hearing, it is essential to be able to see the movements of the mouth while communicating. With the help of clear masks, they can see full facial expressions.

Why you want to know if your customers are bored, and how to find out

As emotions run through everyday life, facial expression analysis is often used in consumer and behavior research. With FaceReader you can now detect affective attitudes as well.
