FaceReader

Set up your system

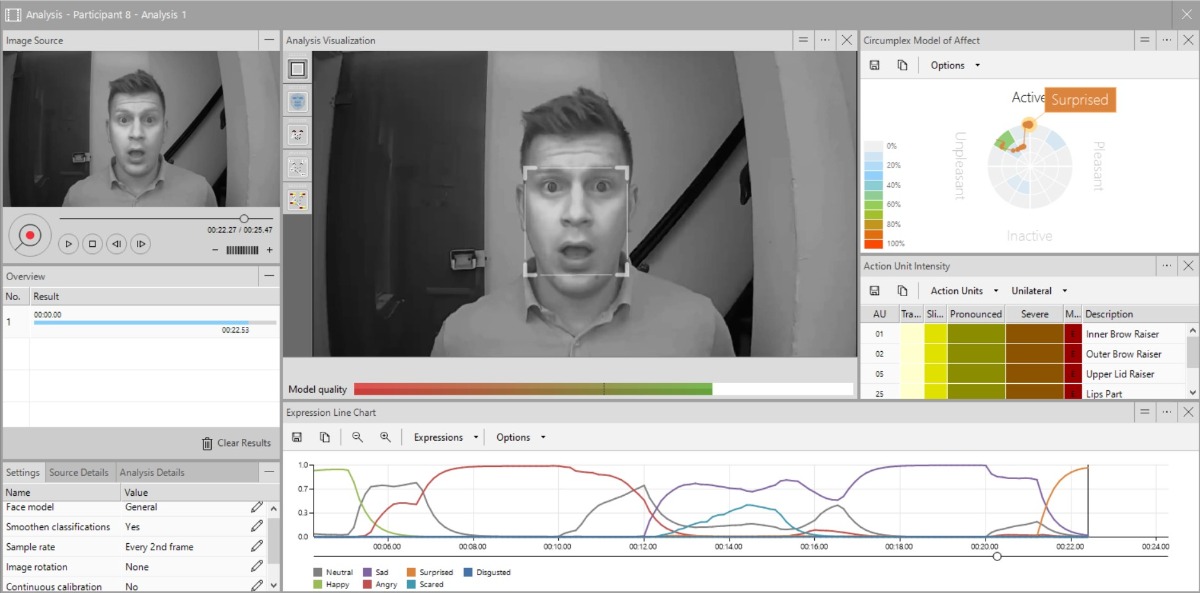

FaceReader is a user-friendly package that helps automate your research. It supports different input sources and system preferences.

FaceReader™ achieves the best performance if it gets a good (video) image. Both the placement of the camera and the lighting of the subject’s face are of crucial importance in obtaining reliable classification results.

You might wonder how to set up your FaceReader system for the best results. Let us guide you through the options with some practical tips and how-tos to help you make the most of your system.

FaceReader is the most robust automated system for gathering accurate and reliable data about facial expressions.

- Clear insights into the effect of different stimuli on emotions

- Very easy to use: save valuable time and resources

- Easy integration with eye tracking data and physiology data

Light and movement

You can achieve the best results with diffused frontal lighting on your test participant. Noldus offers illumination to optimize your set-up. You can follow the emotions of your test participants if their orientation, movement, and rotation are within certain limits.

On our Behavioral Research Blog, you can find several useful posts about FaceReader: 5 tips to get started and how to optimize your facial expression analysis.

Video, camera, infrared recording, or image

FaceReader can be used with different input sources. You can upload still images for analysis or analyze facial expressions from video, using a pre-recorded file.

When analyzing from video, you can choose an accurate frame-by-frame mode or skip frames for high-speed analysis. You can also switch to a USB webcam or IP camera. FaceReader enables you to analyze live and to record video and audio simultaneously.
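To make the trade-off between frame-by-frame and frame-skipping analysis concrete, here is a minimal Python sketch. It is not FaceReader code and does not use any FaceReader API: the function names `frames_to_analyze` and `effective_rate` and the `step` parameter are hypothetical, chosen only to illustrate how skipping frames reduces both the workload and the temporal resolution.

```python
def frames_to_analyze(total_frames: int, step: int = 1) -> list[int]:
    """Return the indices of the frames that would be analyzed.

    step=1 means frame-by-frame analysis; step=3 means every third
    frame is analyzed and the two frames in between are skipped.
    (Hypothetical helper for illustration only.)
    """
    if step < 1:
        raise ValueError("step must be at least 1")
    return list(range(0, total_frames, step))


def effective_rate(fps: float, step: int) -> float:
    """Analyzed frames per second of video when every `step`-th frame is used."""
    if step < 1:
        raise ValueError("step must be at least 1")
    return fps / step


# A 10-frame clip analyzed at every third frame.
print(frames_to_analyze(10, step=3))
# A 30 fps video analyzed at every third frame.
print(effective_rate(30.0, step=3))
```

With a larger step, fewer frames are processed, so analysis finishes sooner, but short-lived expressions that fall entirely between two analyzed frames can be missed.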

If you plan to analyze multiple videos, you can analyze them all at one time. Once you have selected the videos, the software does the work for you.

Since FaceReader 8, it is possible to analyze infrared (IR) recordings. This allows you to use FaceReader in areas with low light conditions.

Automatic calibration

You can use the automatic calibration to tailor your facial expression analysis to a specific person. You can either run the calibration before analysis, or choose to calibrate continuously during the observation.

The individual calibration function enables you to correct person-specific biases towards a certain emotional expression, for example when someone's neutral face appears to show a lot of anger. You can create a calibration model using live camera input, images, or video of the test participant showing a neutral expression.
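The idea of correcting a person-specific bias can be sketched as a simple baseline subtraction. This is an assumption made for illustration: the page does not describe how FaceReader's calibration actually works, and the functions `neutral_baseline` and `apply_calibration`, as well as the score dictionaries, are hypothetical.

```python
def neutral_baseline(neutral_frames: list[dict[str, float]]) -> dict[str, float]:
    """Average each expression's intensity over frames of a neutral face.

    Each frame is a dict mapping an expression name to an intensity
    between 0 and 1. (Hypothetical data layout, not a FaceReader format.)
    """
    if not neutral_frames:
        raise ValueError("at least one neutral frame is required")
    count = len(neutral_frames)
    return {
        name: sum(frame[name] for frame in neutral_frames) / count
        for name in neutral_frames[0]
    }


def apply_calibration(scores: dict[str, float],
                      baseline: dict[str, float]) -> dict[str, float]:
    """Subtract the neutral baseline from new scores, never going below 0."""
    return {
        name: max(0.0, value - baseline.get(name, 0.0))
        for name, value in scores.items()
    }


# A participant whose neutral face is scored as fairly angry.
baseline = neutral_baseline([
    {"angry": 0.25, "happy": 0.0},
    {"angry": 0.75, "happy": 0.0},
])
# Only the anger above this person's own baseline remains after correction.
print(apply_calibration({"angry": 0.75, "happy": 0.5}, baseline))
```

Subtracting a per-person baseline is only one possible way to model such a correction; it is shown here because it makes the purpose of recording a neutral expression easy to see.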

Choose your system preferences

The software can quickly detect interesting episodes, even in long series of events. You can choose to analyze the whole video or only parts of it. Moreover, when using the Project Analysis Module, you can add markers to interesting events. This module also allows you to compare groups. For example, you can compare differences in reactions to a commercial.

FaceReader Online integration

Want to test your experimental setup before your participants come to the lab? FaceReader Online allows you to quickly test your experiment with a pilot, including the stimuli, data quality, and questionnaires that best fit your goals.

Moreover, combining FaceReader and FaceReader Online enables you to quickly test large groups of participants. Perform your tests from any location, even in the homes of your participants. You can also analyze your FaceReader Online data in more detail by importing it into FaceReader and using the Action Unit module and Custom Expression Analysis.

License structure

For maximum flexibility, FaceReader can also be used with a digital software license key instead of a hardware key (USB dongle). To activate the software license key, you need an internet connection. Upon activation, you can choose between:

- Fixed license - Use the software on a single designated computer. The license is connected to your computer and can be used without an internet connection.

- Floating license - Install the software on any computer and use it where and when you want, for example on the computer in your lab one day and on your laptop at home the next. To use the software, the computer needs to be connected to the internet.

Easy integration with other programs

FaceReader data can also be exported to other programs. You can export both the detailed and the state log, including data about image quality and key point coordinates. Also, FaceReader works perfectly with The Observer XT, creating an integrated solution to study behavior, emotions, and physiological responses.

FaceReader's facial expression recognition and analysis software is even integrated in NoldusHub, our new tool for multimodal research.

This offers a unique solution for synchronization, integration, and analysis of FaceReader data with other data, including but not limited to physiological data, screen captures, and eye tracking data. This compatibility enables you to perform multimodal measurements: what user interface is the test participant looking at or which image is triggering an emotion?
