Our Users

Customer Stories

Our customers

Our customer list speaks for itself. Since 1989, we have worked with organizations ranging from start-ups to Fortune 500 companies, and from small universities to the top 10 universities and research institutes around the world. Every day, we devote our innovation power to our customers, achieving great successes and building lasting relationships. Together we can elevate your research and build your ideal research set-up: designed with you and built by us.

Here is a sample of our customers:

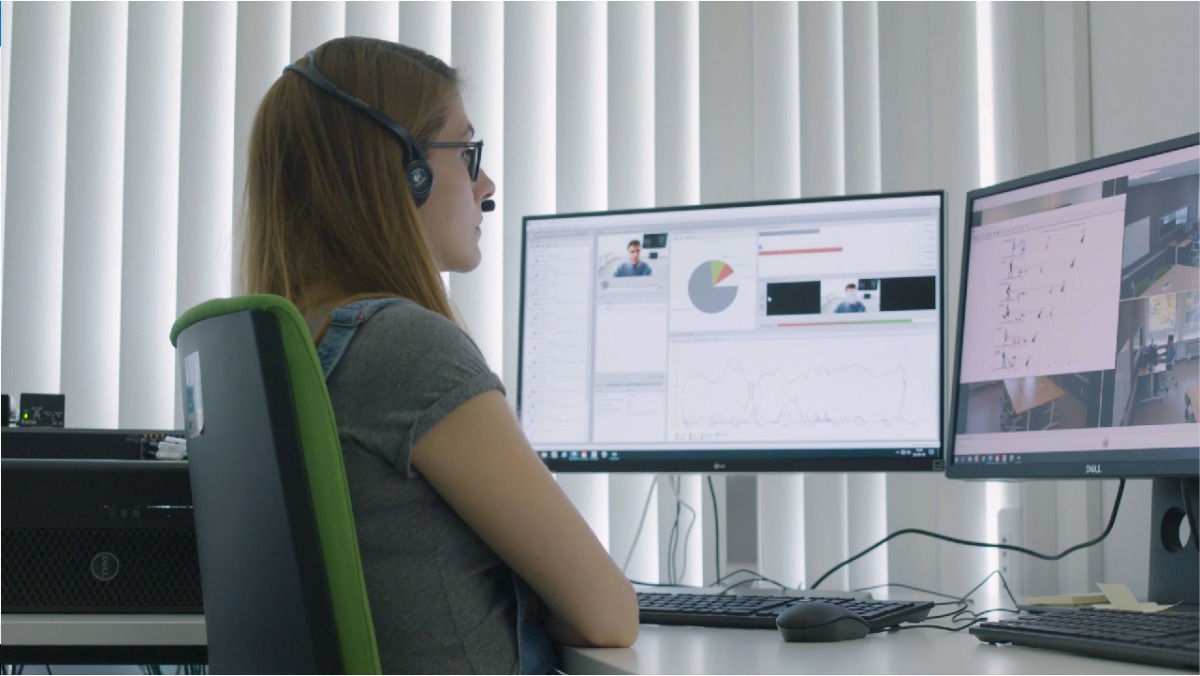

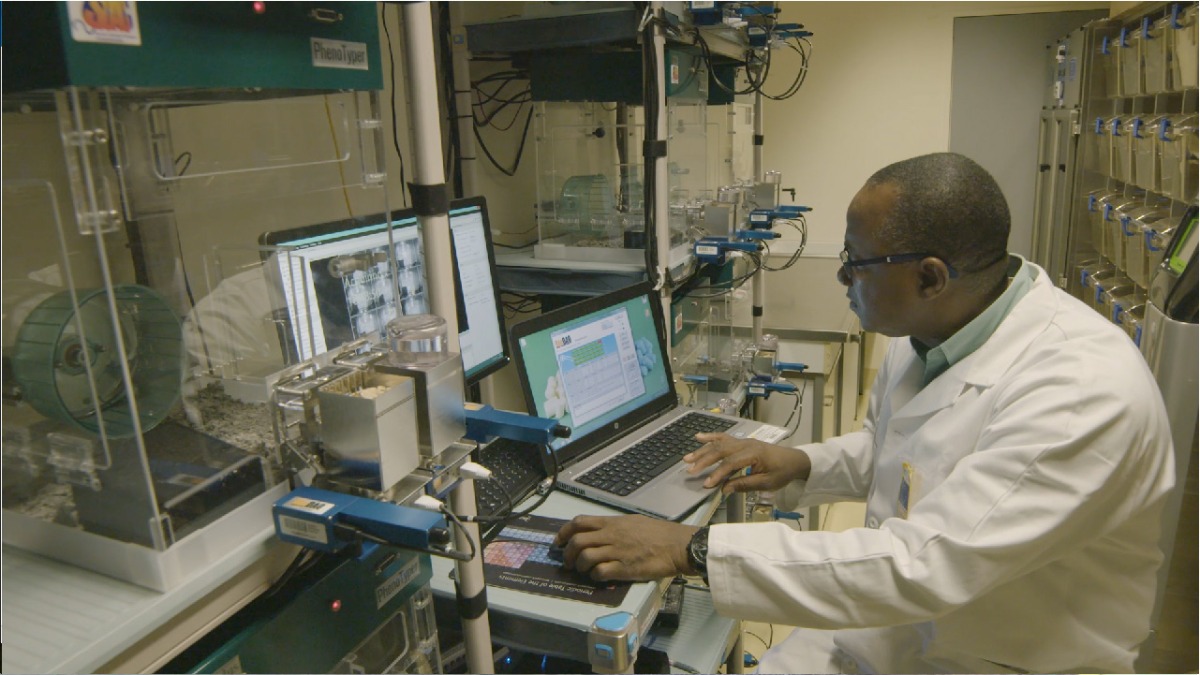

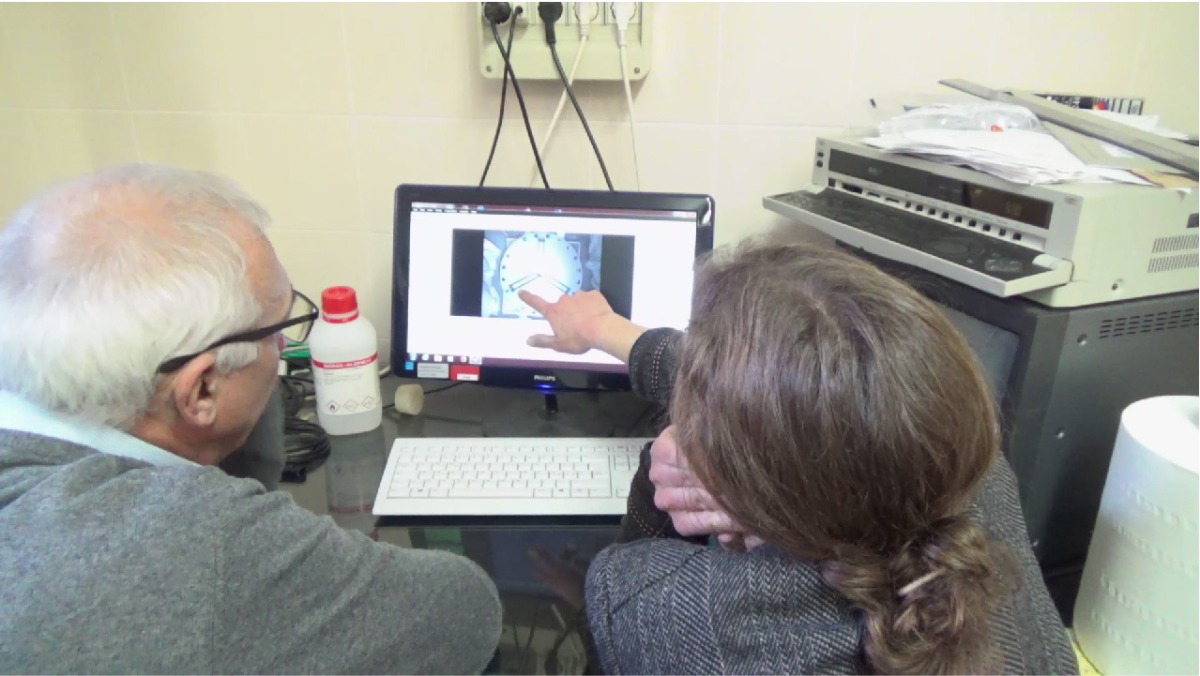

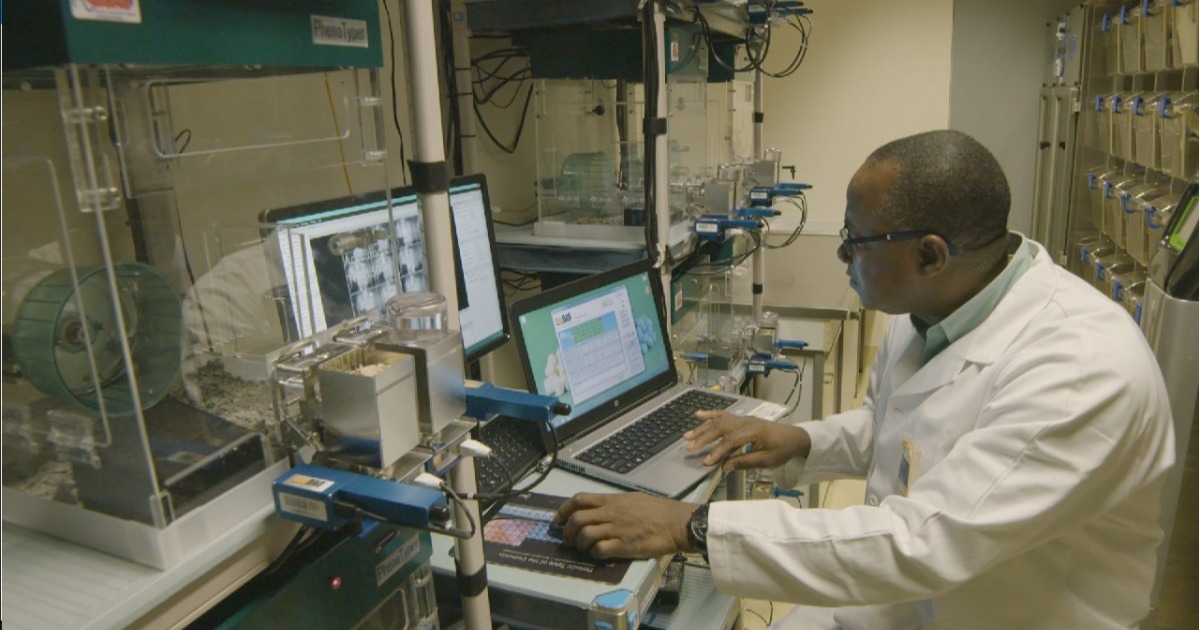

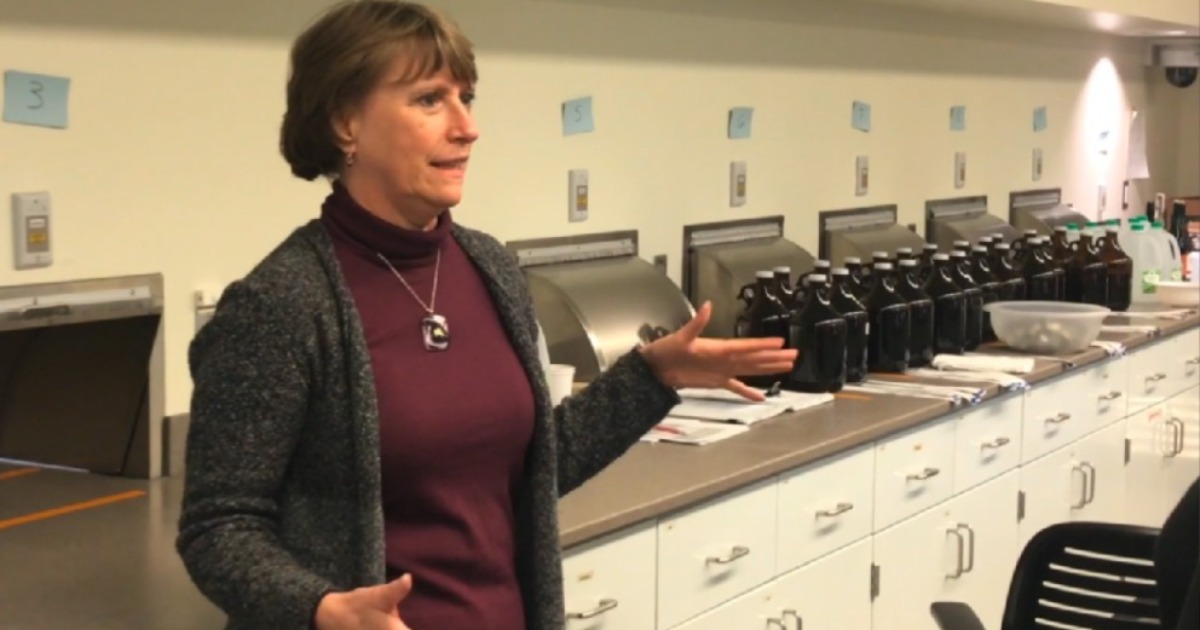

Take a tour through the labs of some of our customers

Take a tour through a large number of research, training, and simulation labs from all over the world, all recently built and in active use. See how they designed their rooms, what equipment they chose, and which software tools they use for data collection and analysis.

Noldus works together with customers around the world to provide them with the best possible tools for their research. Enjoy the tours!

Get your Noldus lab

When you purchase a Noldus lab, integration and synchronization of all equipment is part of our installation service. You can be sure that your hardware and software will work together, and that all your data is in sync. No matter what kind of research you are working on, we will help you get started quickly!

Recent blog posts

5 reasons to attend Measuring Behavior 2024

Measuring Behavior is the conference for everyone interested in new methods and tools for measuring the behavior of people and animals. Have a look at the many learning and networking opportunities it offers.

How stress and emotions can affect performance

Medical first responders need to undergo extensive training to maintain and enhance their skills. Malfait and her team developed a methodology to measure how they manage stress and emotions.